Desktop sized "cloud"

Or, here's my pet mini-cloud

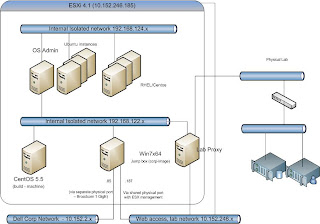

I first used VMware Workstation as a development tool somewhere around 2003. It was a cost-effective way to test the software I was working on against the quirks of different operating systems. On one Linux desktop I could have all my development tools, including a few Windows 95 installations.

So, now you're asking - "what does that have to do with clouds?" Well, not much yet.

For my current project (Crowbar) I found myself needing a setup that includes:

- Multiple OS flavors

  - Win XP for access to corporate resources

  - Ubuntu 10.10 and 11.04 - main testing targets

  - Red Hat and CentOS - for some dev work and testing

- Varying numbers of machines, pretty much depending on the day

  - For basic development, 3 machines - development, admin node and a target node

  - For swift and nova (components of OpenStack) related development - at least 5, sometimes a few more

- Weird-ish network configurations

  - Network segments to simulate public, private and internal networks for nova and swift

  - An isolated network for provisioning work (avoid nuking other folks' machines)

  - Corporate network... must have the umbilical cord.

Oh, and the mix changes at least twice weekly if not a bit more.

That list is probably a bit too long (though if I were more thorough it would take 2 pages). Imagine how you would build that. How much would it cost to set up? How many cables would you have to run to make a change?

The most relevant quote I could find (thanks, Google) is from Walter Chrysler:

"Whenever there is a hard job to be done I assign it to a lazy man; he is sure to find an easy way of doing it."

And I am lazy. And VMware ESXi is a great solution for lazy people... standing up a new server by cloning an existing one is so much easier than racking one up and running all the cables (not to mention the paperwork to buy it). Making networking changes is a cinch too - just create the appropriate virtual switch and add virtual NICs on that network to all the machines that need access.

Here's a simplified setup, and some notes:

A few observations and attempts to provide logic to the madness:

- Corporate IT does not really like having machines they're not familiar with on their network (i.e. non-corp images). On a network as large as Dell's, it's hard to complain about that. It mandates isolating all but one machine from the corp net.

- The WinXP image is a bastion host of sorts - it is there to provide access to the environment. It is also used to manage the ESX server itself.

- Access to multiple physical labs is achieved in 2 ways:

  - Multiple NICs in the ESX server (6 1GigE ports in total)

  - VLAN tagging on the virtual NICs. The access switch to the isolated labs uses the incoming VLAN ID to select the correct environment.
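To make the isolation rule a bit more concrete, here is a minimal sketch that models the layout as plain data and checks that only the bastion host touches the corporate network. This is not how ESX stores its configuration; the network names, VM names and helper functions are all made up for illustration.

```python
# Hypothetical model of the layout described above: each virtual network maps
# to the set of VMs that have a virtual NIC on it. All names are illustrative.
CORP_NET = "corp"

networks = {
    CORP_NET: {"winxp-bastion"},
    "provisioning": {"winxp-bastion", "dev", "admin-node", "target-node"},
    "nova-public": {"admin-node", "target-node"},
    "nova-private": {"admin-node", "target-node"},
}


def attach(topology, network, vm):
    """Add a virtual NIC for `vm` on `network`, creating the network if needed."""
    topology.setdefault(network, set()).add(vm)


def isolation_violations(topology, allowed_on_corp):
    """Return the VMs on the corporate network that are not explicitly allowed."""
    return sorted(topology.get(CORP_NET, set()) - set(allowed_on_corp))


if __name__ == "__main__":
    # With only the bastion on the corp net, nothing is reported.
    print(isolation_violations(networks, {"winxp-bastion"}))
```

Running a check like this after every network change would flag a test VM that accidentally ended up with a NIC on the corporate segment.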

ESX configuration

The test VMs are configured as the equivalent of AWS tiny to small instances (i.e. 1-2 GB RAM, 2 virtual cores), depending on their workloads. The actual development VMs are beefier, more like a large (7 GB RAM, 4 cores).

The server is configured with 2 resource pools - one for "Access" and one for pretty much everything else. The intent is to ensure that whatever crazy things are going on in the test VMs (anyone ever peg a CPU @100% for a bit?), I can keep working.

As was famously said - clouds still run on metal... so here are the metal specs:

- 2 socket, 4 core Xeon @ 2.4 GHz

- 24 GB RAM

- 500 GB 15k SAS disks

With this hardware I've been running up to 12 VMs and still being somewhat productive. My biggest complaint is disk I/O performance, especially seeing that DVD-sized images fly all over the place. To solve that, I'll be migrating this setup to a Dell PEC 2100 box, with 12 spindles configured in a RAID 1E setup.
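For a rough feel of how the VM sizes above stack up against 24 GB of RAM, here is a back-of-the-envelope sketch. The function name and the reserved amount (one development VM plus an assumed 2 GB access VM) are my own illustrative choices, and the math ignores hypervisor overhead and memory overcommit.

```python
HOST_RAM_GB = 24          # from the host specs above
DEV_VM_RAM_GB = 7         # the "large"-like development VM
TEST_VM_SIZES_GB = (1, 2)  # "tiny" to "small" test VMs


def vm_capacity(host_ram_gb, reserved_gb, per_vm_gb):
    """Count the test VMs of `per_vm_gb` that fit in the RAM left after reservations.

    Ignores hypervisor overhead and memory overcommit, so treat the result
    as a rough estimate rather than a limit ESX enforces.
    """
    if per_vm_gb <= 0:
        raise ValueError("per_vm_gb must be positive")
    free_gb = max(host_ram_gb - reserved_gb, 0)
    return free_gb // per_vm_gb


if __name__ == "__main__":
    # Assumption: one development VM and a 2 GB access VM are always running.
    reserved = DEV_VM_RAM_GB + 2
    for size in TEST_VM_SIZES_GB:
        count = vm_capacity(HOST_RAM_GB, reserved, size)
        print(f"{size} GB test VMs that fit: {count}")
```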

But is it a cloud?

Some of the popular definitions for cloud involve the following criteria:

- Pay-per-use

- On-demand access to resources, or at least the ability of the resource user to self provision resources

- Unlimited resources, or at least the perception thereof

For the desktop-sized cloud, money is not a factor, so scratch that.

Since I pretty much configure ESX at will, I think I get a check on the "on-demand" aspect. Yes, I mostly use the vCenter user interface, nothing as snazzy as the AWS APIs. In a previous life I've used the VMware SDK (the Perl version), and was pretty happy with the results. It's just that most of the changes to the environment fall into too many buckets to justify spending the time trying to automate them.

Now, my current server is far from having unlimited resources... but unless you deal with abstract math, there is no real unlimited-ness. What actually matters is the ratio of "how much I need" to "how much I have". The oceans are bounded, but if all you need is a few teacups' worth of water, you're probably comfortable considering the ocean an unlimited resource.

Oops... back to servers. Over the past few weeks, I've been taking snapshots of performance metrics. CPU utilization is around 50%, disk capacity is < 30%. Memory is my current barrier, around 80%.
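One naive way to put numbers on the "need" versus "have" ratio is to ask how much each resource could grow before it hits 100%. The sketch below assumes utilization scales linearly with the size of the setup, which is a simplification, and the helper names are mine.

```python
# Utilization figures quoted above, as fractions of capacity.
# Disk is reported as "< 30%", so 0.30 is used as an upper bound.
utilization = {"cpu": 0.50, "disk": 0.30, "memory": 0.80}


def growth_factor(used_fraction):
    """How many times the current load fits into total capacity (linear model)."""
    if not 0 < used_fraction <= 1:
        raise ValueError("used_fraction must be in (0, 1]")
    return 1.0 / used_fraction


def limiting_resource(util):
    """Return the name of the resource with the least headroom."""
    return max(util, key=util.get)


if __name__ == "__main__":
    for name, used in utilization.items():
        print(f"{name}: room for about {growth_factor(used):.2f}x the current load")
    print("first to run out:", limiting_resource(utilization))
```

Under that linear assumption, memory is the first resource to run out, which matches it being the current barrier.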

If I needed to double the size of my virtual setup today, I could probably do that. The physical resources are pretty limited, but the headroom they afford me is making me think oceans.

This is by no means AWS scale. But I think I'll still name my server "cloudi - the friendly desktop mini-cloud".
